This is a three-part post on using Intel's Realsense depth cameras in a digital theme park, particularly for detecting people from a top-view position. The detected positions are used as input for visual effects projected back onto the floor or, in some cases, onto a ball pool.

The following image will probably better explain the setup used.


If you look closely, there are three Realsense cameras attached to the ceiling close to the projectors.

The goals of the project are:

- We want to project wave ripple effects whenever someone is present.

- The effect is centered on where the person is standing and spreads outward, just like a ripple on water.

In this first part, I will share how we decided to detect a person using Realsense D415 cameras.

Person Detection Process

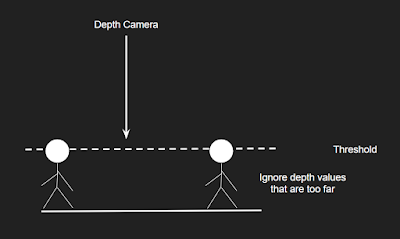

There are several ways to detect people, but since we do not need very accurate position information, I found that one of the easiest ways is to use the Realsense depth sensor to detect people's heads. This is done by thresholding the depth image at a certain value, as shown in the following figure.

In the actual implementation, because I need to detect kids as well as adults, I ended up using two depth ranges as thresholds.

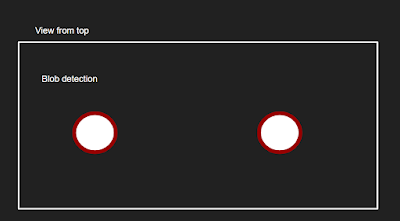
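The thresholding step can be sketched as follows. This is a minimal illustration, not the original implementation: the camera-to-floor distance and the two head-height bands are hypothetical numbers, and the depth frame is assumed to be already converted to millimetres (the usual default unit for D400-series cameras) and given as a list of rows. A head at height h above the floor appears at depth (camera-to-floor distance - h), so each height band maps to a depth band. The sketch keeps one mask per band. One plausible reason for keeping them separate, which is a guess and not stated in the post, is that an adult's shoulders can fall inside the kids' band.

```python
from typing import List, Tuple

# Hypothetical mounting distance from the camera to the floor, in millimetres.
CAMERA_TO_FLOOR_MM = 3000

# Hypothetical head-height bands (millimetres above the floor).
HEAD_HEIGHT_BANDS_MM: List[Tuple[int, int]] = [
    (1500, 2000),  # adults
    (900, 1500),   # kids
]


def height_band_to_depth_band(band: Tuple[int, int],
                              camera_to_floor_mm: int = CAMERA_TO_FLOOR_MM) -> Tuple[int, int]:
    """Convert a (low, high) height band into the matching (near, far) depth band."""
    low_h, high_h = band
    return camera_to_floor_mm - high_h, camera_to_floor_mm - low_h


def threshold_depth(depth_mm: List[List[int]],
                    bands: List[Tuple[int, int]] = HEAD_HEIGHT_BANDS_MM,
                    camera_to_floor_mm: int = CAMERA_TO_FLOOR_MM) -> List[List[List[int]]]:
    """Return one binary mask per height band (1 = pixel inside that band).

    A depth of 0 means "no data" on Realsense depth frames and is always rejected.
    """
    masks = []
    for band in bands:
        near, far = height_band_to_depth_band(band, camera_to_floor_mm)
        masks.append([[1 if d > 0 and near <= d < far else 0 for d in row]
                      for row in depth_mm])
    return masks


if __name__ == "__main__":
    adults, kids = threshold_depth([[0, 1200, 1800, 2900]])
    print(adults, kids)
```

Plain Python loops are used here only to keep the sketch dependency-free; on full 1280x720 frames, a vectorized NumPy comparison would be the practical choice.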

After thresholding depth, we get an image similar to the image below.


In reality, the image is noisy and the blobs are not clean circles, but the illustration above conveys the general idea of the algorithm. The output of the detection step is therefore an array of blob positions, each corresponding to one person's position:

[(x1, y1), (x2, y2), ... , (xn, yn)]
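Turning a thresholded mask into that list of positions amounts to finding connected blobs and taking their centroids. The sketch below does this with a simple 4-connected flood fill and discards blobs smaller than a minimum area, which is one common way to suppress the noise mentioned above. The `min_area` value is a hypothetical tuning parameter, not a number from the original system. In practice, OpenCV's `cv2.connectedComponentsWithStats` performs the same labeling and returns centroids directly.

```python
from collections import deque
from typing import List, Tuple


def find_blob_centroids(mask: List[List[int]], min_area: int = 50) -> List[Tuple[float, float]]:
    """Return the (x, y) centroid of every 4-connected blob with at least min_area pixels."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    visited = [[False] * width for _ in range(height)]
    centroids: List[Tuple[float, float]] = []

    for y in range(height):
        for x in range(width):
            if not mask[y][x] or visited[y][x]:
                continue
            # Flood-fill this blob, accumulating its pixel count and coordinate sums.
            queue = deque([(x, y)])
            visited[y][x] = True
            count = sum_x = sum_y = 0
            while queue:
                cx, cy = queue.popleft()
                count += 1
                sum_x += cx
                sum_y += cy
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < width and 0 <= ny < height and mask[ny][nx] and not visited[ny][nx]:
                        visited[ny][nx] = True
                        queue.append((nx, ny))
            # Small blobs are treated as noise and dropped.
            if count >= min_area:
                centroids.append((sum_x / count, sum_y / count))
    return centroids


if __name__ == "__main__":
    mask = [
        [1, 1, 0, 0],
        [1, 1, 0, 1],
        [0, 0, 0, 0],
    ]
    print(find_blob_centroids(mask, min_area=2))
```

With `min_area=2`, the single isolated pixel on the right is rejected and only the 2x2 blob's centroid remains.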

Keep in mind that these positions are in the image coordinates of a single Realsense device, with a resolution of 1280x720. In the next part, I will describe how the data from several Realsense cameras is merged.

See Part 3 here: http://www.dailytinkerer.com/2020/04/image-processing-with-intel-realsense_18.html

Appendix: Trials and Experiments

When considering how to detect people easily from a top view while still maintaining reasonable accuracy, I first tried out the detection method on paper cups. It took several rounds of tuning and experimentation before I was confident the method would work relatively well.

Paper cups taped to the wall and detected by the depth camera

This setup also served as the basis for the multi-camera calibration of my system later on.

The paper cups' height from the wall

As seen in the image, the depth camera was able to determine the height (depth) of the paper cups. This eventually became the basis for the people detection system described in the previous section.
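Measuring the cups' height comes down to subtracting each pixel's depth from the depth of the background plane (the wall here, the floor in the theme park). The sketch below is one simple way to do that and is not necessarily how the original experiment computed it. It assumes the camera faces the plane squarely, so the plane sits at a roughly constant depth, and that objects cover less than half of the frame, so the median of valid readings is a reasonable estimate of the plane's depth. Both are assumptions for illustration.

```python
from statistics import median
from typing import List


def estimate_plane_depth(depth_mm: List[List[int]]) -> float:
    """Estimate the background plane's depth as the median of all valid (non-zero) readings."""
    valid = [d for row in depth_mm for d in row if d > 0]
    return median(valid)


def height_above_plane(depth_mm: List[List[int]], plane_depth_mm: float) -> List[List[float]]:
    """Height of each pixel above the plane; invalid pixels and pixels behind the plane map to 0."""
    return [[max(0, plane_depth_mm - d) if d > 0 else 0 for d in row] for row in depth_mm]


if __name__ == "__main__":
    # A flat wall at 1000 mm with one cup top at 890 mm and one missing reading.
    frame = [
        [1000, 1000, 1000],
        [1000, 890, 0],
        [1000, 1000, 1000],
    ]
    plane = estimate_plane_depth(frame)
    print(plane, height_above_plane(frame, plane))
```

The same subtraction, with the floor in place of the wall, is what lets height bands such as "adult head" or "kid head" be expressed as depth thresholds.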
